Let me start with this — I’m not anti-technology.

In fact, I’ve used ChatGPT myself for quick brainstorming and explaining complex concepts in simple words for patients.

But…

Over the past year, I’ve started seeing something unusual in my clinic.

Patients walking in with self-diagnoses that sound like they came straight from a robot.

Intricate symptom descriptions, “AI-generated” therapy scripts, and medication suggestions they found online.

At first, I thought — “Well, at least they’re curious about their health.”

But then I saw the downsides up close.

The Possible Harms — Broken Down Like a Clinical Case Study

Symptoms (What We See Clinically)

- Information Overload Anxiety → Patients come in more stressed because they’ve read too much without proper context.

- False Reassurance → AI might say, “Your symptoms don’t match depression,” leading someone to delay getting help.

- Misinterpretation of Advice → Psychiatric terms are nuanced; AI can mix up “intrusive thoughts” with “delusional beliefs.”

- Over-Medicalization → Normal stress gets labeled as a “severe disorder” by AI, creating unnecessary fear.

- Therapeutic Detachment → Patients sometimes feel “AI gets me better than humans,” making them reluctant to open up to real therapists.

Etiology (The Root Causes)

- Overconfidence in AI → People assume ChatGPT is medically infallible.

- Lack of Emotional Nuance → AI can mimic empathy but doesn’t feel it, so subtle cues are missed.

- Echo Chamber Effect → AI often reflects back the same belief a user already has, reinforcing unhealthy thinking.

- Language Precision Gaps → Psychiatry relies on exact questioning; AI answers can be vague or oversimplified.

Epidemiology (Who’s Affected and How Widespread It Is)

- Younger Adults (18–35) → More tech-comfortable, more likely to rely on AI before doctors.

- Urban Populations → Better internet access means more AI exposure.

- High-Anxiety Personalities → People prone to overthinking are particularly drawn to repeated “AI reassurance sessions.”

- Illustrative Clinic Estimate (hypothetical but plausible, not a measured statistic) → In the last 12 months, roughly 30% of new patients had already “consulted” ChatGPT before seeing me.

History (How We Got Here)

- 2023–24 → ChatGPT exploded in popularity, becoming a “friend” for many during lonely nights.

- Post-2024 → Psychiatry forums began reporting misdiagnosed or untreated cases due to AI over-reliance.

- Now (2025) → AI literacy in mental health is still low; patients often don’t understand it’s a prediction tool, not a diagnostic entity.

Pathogenesis (How the Harm Develops)

- Initial Curiosity → Patient asks AI about symptoms.

- Partial Accuracy → AI gives a mostly correct but incomplete explanation.

- Cognitive Bias Kick-in → Patient focuses only on the parts that match their fears or hopes.

- Delay in Care → Whether too reassured (“I’m fine”) or too alarmed (“I’m in danger”), the patient ends up missing timely intervention.

- Therapeutic Relationship Erosion → AI becomes the “first point of contact,” and human professionals become the second choice.

Anecdote from My Clinic

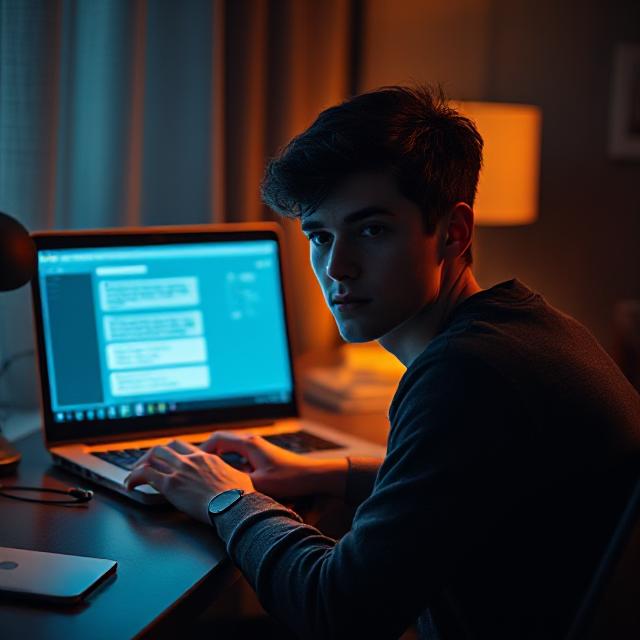

I once had a young man in his 20s walk in saying,

“Doctor, I’ve already done CBT with ChatGPT, so I just need medication now.”

Turns out, the “CBT” he got was a generic script.

No personalized triggers identified. No real homework follow-ups.

It’s like reading about swimming and thinking you can compete in the Olympics.

What’s the Takeaway?

ChatGPT isn’t evil.

It’s a tool — and tools can build or break.

In psychiatry, the human touch isn’t a luxury; it’s the treatment itself.

If you’re struggling — whether mildly or severely — start with a real conversation with a mental health professional. AI can supplement, but it should never replace.

Disclaimer

This blog is for educational purposes only and does not replace professional medical advice, diagnosis, or treatment. Always seek the guidance of a qualified psychiatrist or therapist for your mental health concerns.

Reach Out to Us

Feeling overwhelmed by online information?

Let’s talk it out — in person, with empathy, and without judgment.

📍 Mind & Mood Clinic, Nagpur, India

📞 Call Dr. Rameez Shaikh at +91-8208823738 today to book your session.

Dr. Rameez Shaikh (MBBS, MD, MIPS) is a consultant Psychiatrist, Sexologist & Psychotherapist in Nagpur and works at Mind & Mood Clinic. He believes that science-based treatment, encompassing spiritual, physical, and mental health, will provide you with the long-lasting knowledge and tools to find happiness and wholeness again.

Dr. Rameez Shaikh, a dedicated psychiatrist, is a beacon of compassion and understanding in the realm of mental health. With a genuine passion for helping others, he combines his extensive knowledge and empathetic approach to create a supportive space for his patients.